In The Ascent of Man, the mathematician Jacob Bronowski describes a conversation in London with the legendary John von Neumann, some time after von Neumann had published Theory of Games and Economic Behavior with Oskar Morgenstern in 1944.

“You mean, the theory of games like chess?” Bronowski asked, after they had settled into their London cab.

“No, no,” von Neumann replied, “Chess is not a game. Chess is a well-defined form of computation. You may not be able to work out the answers, but in theory there must be a solution, a right procedure in any position.”

“Now, real games,” he said, “are not like that at all. Real life is not like that. Real life consists of bluffing, of little tactics of deception, of asking yourself what is the other man going to think I mean to do. And that is what games are about in my theory.”

Bronowski was a chess player; von Neumann was a poker player. His book on game theory included a chapter called ‘Poker and Bluffing’. By all accounts, von Neumann played poker only occasionally, and not very well at that. But he recognised that poker was a fundamentally richer game than chess, and he built his theory around a stripped-down version of it.

Decades later, professional poker player Annie Duke retells this story in her book Thinking in Bets, and argues:

The decisions we make in our lives—in business, saving and spending, health and lifestyle choices, raising our children, and relationships—easily fit von Neumann’s definition of “real games.” They involve uncertainty, risk, and occasional deception, prominent elements in poker. (…)

Chess, for all its strategic complexity, isn’t a great model for decision-making in life, where most of our decisions involve hidden information and a much greater influence of luck. This creates a challenge that doesn’t exist in chess: identifying the relative contributions of the decisions we make versus luck in how things turn out.

Poker, in contrast, is a game of incomplete information. It is a game of decision-making under conditions of uncertainty over time. (Not coincidentally, that is close to the definition of game theory.) Valuable information remains hidden. There is also an element of luck in any outcome. You could make the best possible decision at every point and still lose the hand, because you don’t know what new cards will be dealt and revealed. Once the game is finished and you try to learn from the results, separating the quality of your decisions from the influence of luck is difficult.

Duke gets it mostly right. In her book, she argues that we have more to learn from poker than from chess. In chess, whenever we make a mistake, it is possible to pinpoint what the mistake was and when in the game we made it. But in poker, as in life, mistakes and outcomes can be unrelated: it is possible to blunder in poker and still beat a world champion, and, conversely, it is possible to ‘play perfectly’ and still lose.

Learning in poker also more closely reflects learning in the real world. At the highest levels of chess, a mistake leads to an observable, predictable outcome that you may later study. In poker, you may play a hand only to have your opponents fold, which means you walk away with no idea whether you played well or badly.

Duke’s solution, of course, is to focus on explicit decision analysis. Poker demands that we learn probabilistic thinking, and it requires us to separate the evaluation of our outcomes from the quality of our decision making. Thinking in Bets covers many of these techniques, and Duke now teaches her methods to business executives beyond the world of poker.

Duke’s book is very much in line with mainstream decision theory. The book argues that our brains evolved for less complex environments, and that we rely on heuristics (mental shortcuts!) that cause us to have cognitive biases. In turn, these biases lead to errors in judgment that prevent us from achieving our goals.

This might not surprise you, especially if you’ve spent any time reading about decision making: if, in addition to Duke’s book, you have read Daniel Kahneman’s Thinking, Fast and Slow, worked through Philip Tetlock’s Superforecasting, or are familiar with Richard Thaler’s Nudge. These authors argue that the best response to this quandary is to find ways to work around our brains’ deficiencies, in order to make ever more rational judgments and decisions.

In simple terms, if you have read only these books, you would likely conclude that heuristics should be frowned upon. Heuristics lead us to cognitive biases, which in turn prevent us from making good decisions; good decisions are necessary to achieve our goals; therefore, we should be suspicious of the heuristics we lean on if we want to succeed in life.

But Heuristics Are How We Think

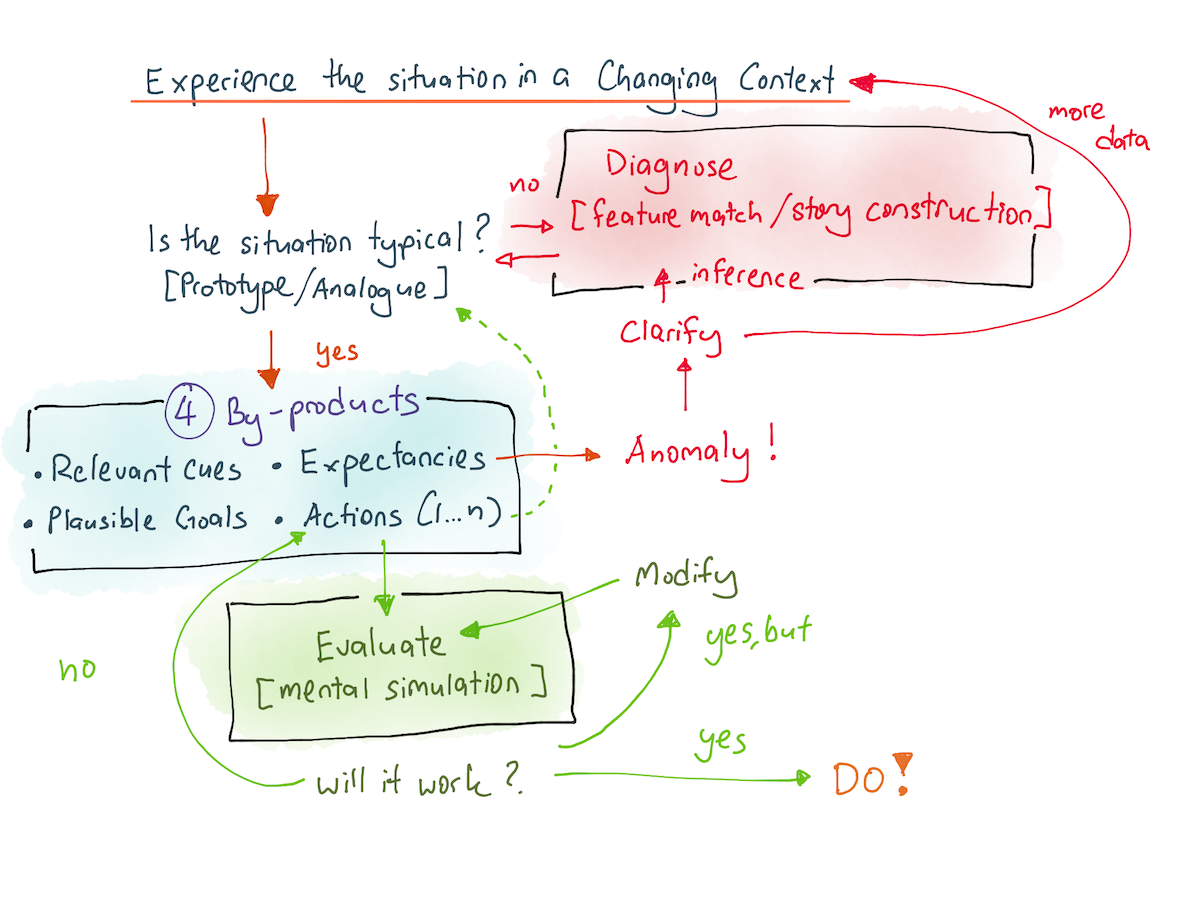

Over the past four weeks, we’ve gone through a series of posts on the nature of tacit knowledge and how it relates to the pursuit of expertise. In Part 2, for instance, I described the recognition-primed decision making (RPD) model as a model of tacit expertise, and gave several examples of using it to extract the tacit knowledge of experts. In last week’s post, I talked about how an understanding of RPD might help us extract tacit knowledge from YouTube (yes, YouTube!) in fields as diverse as judo, computer programming, and music.

But sharp readers might have noticed that RPD is completely dependent on heuristics. As Gary Klein writes in Sources of Power:

The core of the RPD model is a set of heuristics previously described by Amos Tversky and Daniel Kahneman: the simulation heuristic (1974), used for diagnosis and for evaluation, and the availability and representativeness heuristics (1980), for recognizing situations as typical.

In fact, the only explicit analysis in the recognition-primed decision cycle happens after you’ve pattern-matched the situation against a prototype in your head, when you move on to generating and evaluating an action script.

This view of decision making is quite different from the previous one. In the previous view, heuristics are suspect. In this view, heuristics are simply how our brains work. In fact, when someone tells you to acquire expertise, what they’re really saying is “go lean into your heuristics”! And when an expert tells you “it just felt right”, what they’re really saying is that they pattern-matched against what was available to them (availability bias), made sense of the developing situation by generating a narrative (narrative bias), and then executed a solution they had simulated in their heads (simulation heuristic).

For all the attention Duke pays to explicit decision analysis in poker, actual poker performance seems to depend as much on prototype recognition and expert intuition as chess performance does. As Palomaki et al. (2020) note:

A corpus of anecdotal evidence suggests that since the poker environment is complex and fast paced, players need to trust their intuitions or “gut feelings” when making a decision (e.g., Brunson, 2005; Tendler, 2011). These feelings can also be called affective heuristics (Finucane et al., 2000) —that is, “unconscious” processing of task-relevant information experienced phenomenologically as good or bad “feelings” about a situation. It has been empirically established that chess masters, too, often rely on an intuitive “feel” for different moves and assessment of the “board as a whole,” especially in the mid-game where options for various moves are astronomical (e.g., Chassy & Gobet, 2011; Gobet & Chassy, 2009) and it is futile to attempt to work through the alternatives step-by-step in working memory.

And then they quote World Series of Poker champion Doyle Brunson, who says:

Whenever I . . . “feel” . . . I recall something that happened previously. Even though I might not consciously do it, I can often recall if this same play . . . came up in the past, and what the player did or what somebody else did. So, many times I get a feeling that he’s bluffing or that I can make a play and get the pot. [My] subconscious mind is reasoning it all out.

This is similar to what experts tell you whenever you ask them about their tacit knowledge.

Putting The Two Together

So how do we square these two views? The unsatisfying answer is that I don’t yet know.

In 2009, Daniel Kahneman and Gary Klein published a paper titled Conditions for intuitive expertise: a failure to disagree. In it, they outlined the history of their respective approaches, and then argued that expert intuition may only be trusted when:

- The environment has high validity, that is, it contains adequately valid cues that can be learnt, and

- There are sufficient opportunities to learn those relevant cues.

On the basis of these two requirements, Kahneman and Klein argue that domains like political forecasting and stock picking fail both requirements, while domains like chess, medicine and firefighting do not.

(As an interesting aside, they also argue that domains with high levels of uncertainty or hidden information may still be considered high validity; here they give the examples of poker and warfare. Palomaki et al., on the other hand, argue that poker is ‘medium validity’, because its short-term outcomes depend heavily on luck.)

Kahneman and Klein’s paper is probably one of the most important to have come out of the naturalistic decision-making and heuristics-and-biases traditions, but it is only useful up to a point. What we really want to know is how Annie Duke, and others like her, are able to blend the analytical rigour of decision analysis with the speed of recognition-primed expertise. Can we generalise their methods to other skills? And if so, how do we adapt them to our careers?

I don’t yet know the answers to these questions, or what they imply for successful stock pickers and hedge fund managers, two roles that, per Kahneman and Klein, operate in a domain where expert intuition cannot be trusted. What I do know is this: heuristics aren’t good. They aren’t even bad. They are, in a word, OK. Heuristics are simply part of our cognition, and the sooner we accept that we must lean into them in the pursuit of expertise, the easier things will go for us.

See also: The Dangers of Treating Ideas from Finance as Generalised Self Help.


This article is part of the Expertise Acceleration topic cluster.