A pretty useful lens for when a business activity is easy to measure, and when it is not. Part of the Becoming Data Driven in Business Series.

Note: this is Part 10 in a series of blog posts about becoming data driven in business. You may want to read the prior parts before reading this essay.

On August 17, 2023, McKinsey published a report titled Yes, You Can Measure Software Developer Productivity, written for large company executives, proposing a set of four new metrics that McKinsey claimed had been tested in 20 companies. These metrics were:

- Inner/Outer Loop Time Spent — the time a programmer spent building actual software (the inner loop) vs the time spent deploying, integrating, and aligning with security and other organisational objectives (the outer loop).

- Developer Velocity Index Benchmark — a proprietary (to McKinsey!) survey that measures ‘an enterprise’s technology, working practices, and organizational enablement and benchmarks them against peers.’

- Contribution Analysis — a measure of ‘contributions by individuals to a team’s backlog (starting with data from backlog management tools such as Jira, and normalizing data using a proprietary algorithm to account for nuances)’, designed to ‘surface trends that inhibit the optimisation of that team’s capacity.’

- Talent Capability Score — a score that reflects ‘individual knowledge, skills, and abilities of a specific organisation’ that is based on ‘industry standard capability maps’.

All of these metrics were presented with a beautiful sheen of legitimacy, in the way that management consulting outputs so often are.

The Kent Beck Measurement Model

The report lit a fire in the dev communities I’m plugged into: just about every credible software leader I respect had bad things to say about it. Two weeks later, Kent Beck and Gergely Orosz published a two-part response (links one, two), and introduced something I’m going to call Beck’s Measurement Model:

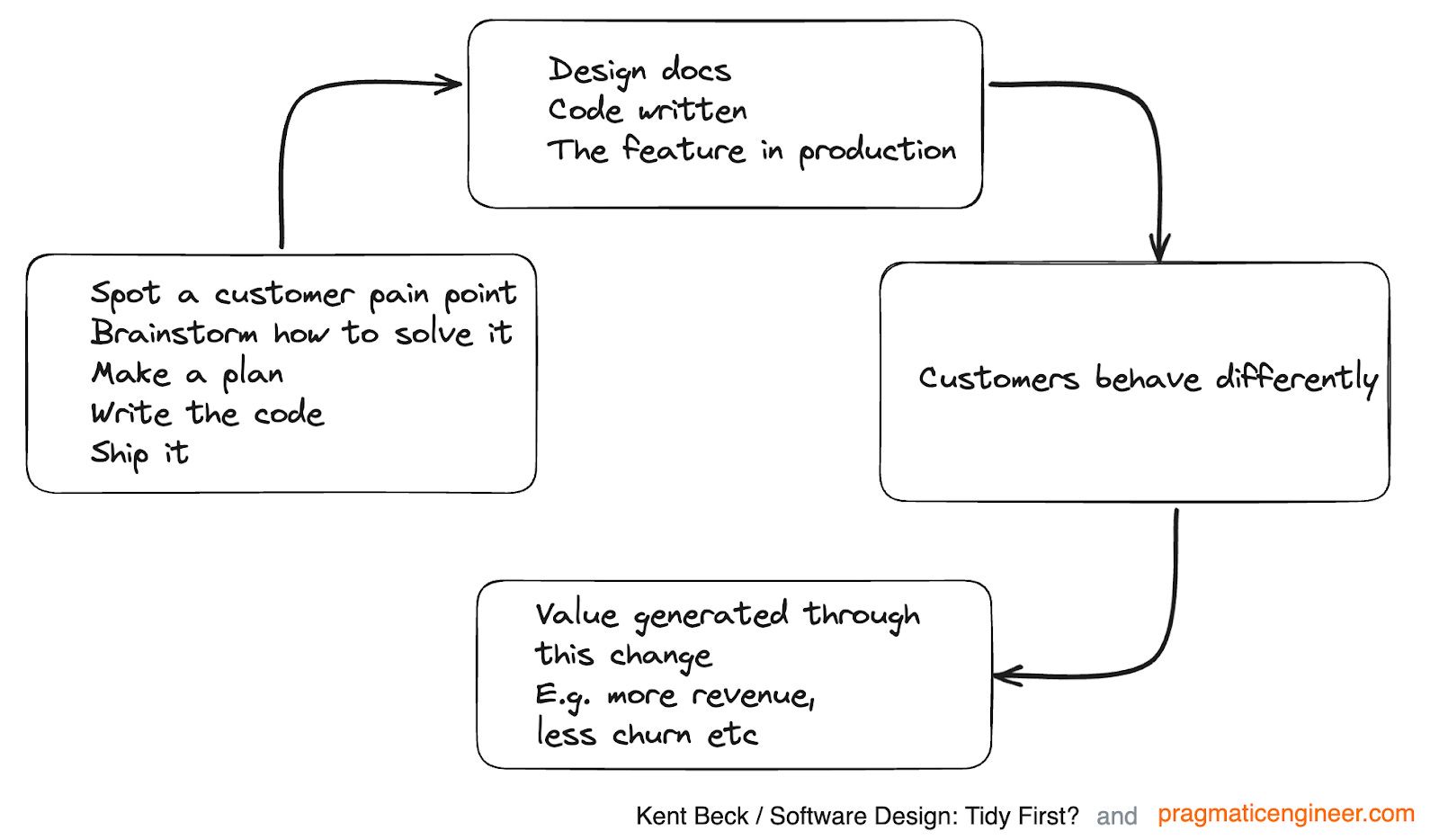

How does software engineering create value? Take the typical example of building a feature, then launching it to customers, and iterating on it. What does this cycle look like? Say we’re talking about a pretty autonomous and empowered software engineering team working at a startup, who are in tune with customers:

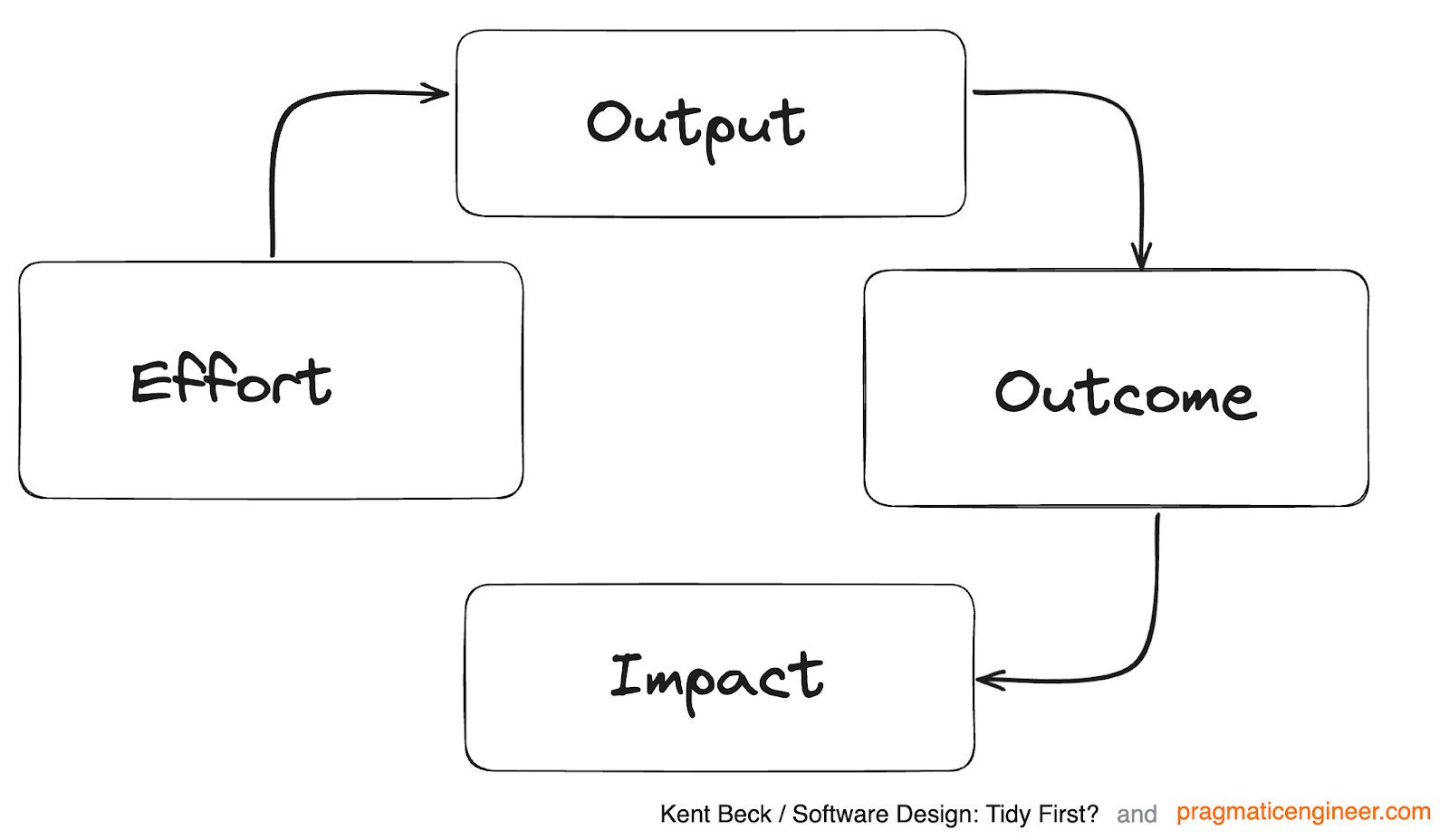

We start by deciding what to do next, and then we do it. This is the effort: planning, coding, and so on. Through this effort, we produce tangible things like the feature itself, the code, design documents, etc. These are the output. Customers will behave differently as a result of this output, which is our outcome. For example, thanks to the feature they might get stuck less during the onboarding flow. As a result of this behavior change, we will see value flowing back to us, such as feedback, revenue, and referrals. This is the impact.

So let’s update our mental model with these terms:

Beck’s Measurement Model is the concept that I want to highlight here. It is illuminating, and wonderful. In member discussions of the Becoming Data Driven in Business Series I have wondered — like many — about the difficulty of measuring software development compared to sales, recruiting, and customer support. I’ve also puzzled over the applicability of the process control approach to software development.

This article is part of the Operations topic cluster, which belongs to the Business Expertise Triad.