John Cutler is currently the head of product education at Amplitude. An important part of his job is to act as a consultant to product teams that use Amplitude; over the course of his entire career, he’s seen and helped hundreds of product organisations.

Cutler’s superpower is that he is able to talk to a set of product people and, within 8 to 20 minutes, figure out the system dynamics of their organisation, and then suggest a bunch of experiments to make that system better. His interventions usually take the form of a prioritised list of experimental changes or team interventions. And he can do this for pretty much any product team in pretty much any type of company.

Of course, this isn't to say that John gets it right 100% of the time; experts are fallible, after all. But it is a pretty remarkable ability. He points out that product leaders often assume that their best practices scale universally; they don’t realise that different teams in different industries in different cultures around the world have vastly different product practices, all of which might work for them, depending on a host of factors. Cutler doesn’t make such mistakes, or at least he doesn’t make as many of them any more; he’s seen too many possible product org configurations to count.

Sometime in the last year, he attempted to write a book about his skills. And then he came up against a wall. Like most experts, Cutler’s expertise is intuitive and context-dependent. Give him a product team and he's able to dig into their issues; ask him to explicate what he does into a simple framework, and he struggles.

Two months ago, we started talking on Twitter. I told John that there was a whole set of techniques designed to extract tacit mental models of expertise, and that he was welcome to give them a try. I said that the simplest technique was something called ACTA, or Applied Cognitive Task Analysis, designed by Laura Militello and Robert Hutton in 1998 for practitioners (as opposed to trained researchers). I suggested that I could give it a go with him.

John said yes.

A Quick Recap of ACTA

ACTA is a protocol of three interview methods and a presentation format, designed to help a practitioner extract information about the cognitive demands and skills required for a task. The three interview methods are:

- You first create a task diagram. This is a broad overview of the task in question, and identifies the difficult cognitive elements.

- You do a knowledge audit — which identifies all the ways in which expertise is used in a domain. You also extract examples based on actual experience.

- You do a simulation interview — you give the expert a simulation of a single incident, and then probe the expert’s cognitive processes over the course of that simulation.

Finally, you present the extracted information in a cognitive demands table.
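For readers who think in code, the protocol can be sketched as a small data structure. This is only an illustration: the names `ActaStep`, `ActaSession`, and `can_build_demands_table` are hypothetical and do not come from Militello and Hutton's materials, and the rule that the cognitive demands table needs all three interviews is an assumption made for the sketch.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Set


class ActaStep(Enum):
    """The three ACTA interview methods, in the order listed above."""
    TASK_DIAGRAM = "task diagram"
    KNOWLEDGE_AUDIT = "knowledge audit"
    SIMULATION_INTERVIEW = "simulation interview"


@dataclass
class ActaSession:
    """Tracks which interviews have been run with one expert (hypothetical helper)."""
    expert: str
    completed: Set[ActaStep] = field(default_factory=set)

    def complete(self, step: ActaStep) -> None:
        self.completed.add(step)

    def remaining(self) -> List[ActaStep]:
        # Enum iteration follows definition order, so steps come back in protocol order.
        return [step for step in ActaStep if step not in self.completed]

    def can_build_demands_table(self) -> bool:
        # Assumption for this sketch: the table draws on all three interviews.
        return not self.remaining()


session = ActaSession(expert="John Cutler")
session.complete(ActaStep.TASK_DIAGRAM)
session.complete(ActaStep.KNOWLEDGE_AUDIT)
print([step.value for step in session.remaining()])
print(session.can_build_demands_table())
```

Modelling the steps as an enum keeps their order explicit, which makes it easy to see what is still outstanding after a partial run.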

Due to my lack of experience using the technique, I only performed the first two tasks with John. I didn’t know how to create a simulation for us to examine together; in fact, I said that this was the trickiest bit of ACTA in my original post on the technique. In retrospect, perhaps I should have asked John for a recording of a product team engagement (though this might violate privacy or ethical rules), or arranged for a call with an actual product team that was willing to be the subject of an ACTA study.

I’ll give this more thought the next time I run ACTA on a willing expert.

What We Extracted

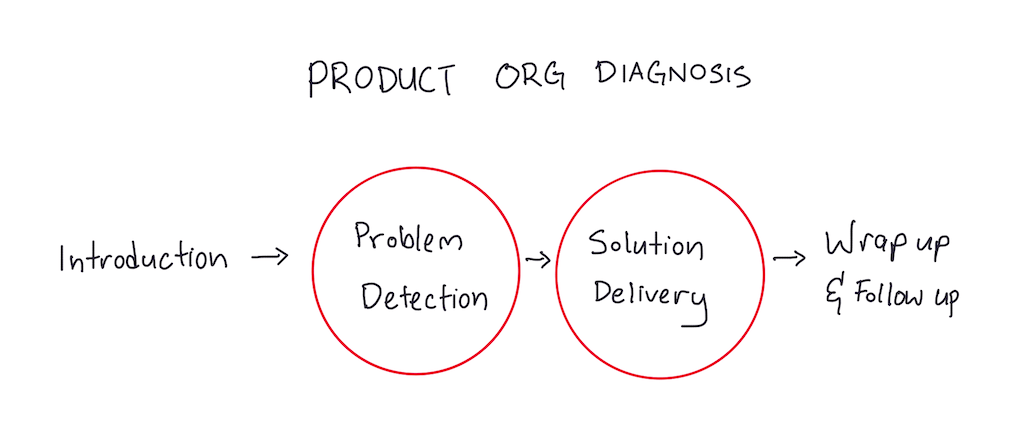

Cutler’s product calls consist of the following four stages:

- Introduction — John opens up the call and gets some pleasantries out of the way. Usually these teams come to him with some problem in mind, so he lets them start off with their issues.

- Problem Detection — John pattern matches by asking questions, letting his intuition guide where to go and what to dive deep into. Sometimes he uses humour to open up the conversation. In many cases John will do a first pass of anti-patterns — that is, problems that are really common — and then let the conversation develop from there. This part takes anywhere between 8-20 minutes. If by the 20 minute mark he isn’t able to map out a few problems, this is likely a rare edge case — a unique organisation that he can't help.

- Solution Delivery — Based on the problems unearthed in Step 2, John will suggest a prioritised list of experiments to try.

- Wrap up and follow up — There may or may not be a follow-up, depending on the nature of the engagement.

We identified Steps 2 and 3 as the parts with the highest cognitive demands:

- Step 2, Problem Detection — A novice would not have John’s intuition on what to dig deeper into, and what to ignore. John is able to respond to the gestalt of the person or team he’s talking to — “the best product teams in the world — there’s a way these people talk about their risks … it’s just a much more structured deliberate way of describing it versus a more kind of frantic way of describing it.” John is also able to get into the heart of the problem more efficiently than a novice would.

- Step 3, Solution Delivery — Some product team problems are easy, or ‘acute’, and these tend to be simpler to diagnose and then fix. A novice could be taught what recommendations to deliver here. But some product team problems are ‘chronic’, or more accurately described as complex systems problems, where multiple things are bad all at once (e.g. bad processes, and no decision power, and technical debt, and bad org infrastructure, and a toxic leader, etc). In such cases, a novice would not be able to prioritise a list of possible interventions the way John would be able to.

Of the two steps, we decided to focus on Step 2, Problem Detection. This was partly due to time constraints, but also because it seemed more in line with John’s goals for his book (as I understood it, he intended it to be a book on how to do what he does when he’s diagnosing the problems of product teams — and the problem detection step is what seems to be the most mysterious part of his expertise).

The Knowledge Audit

ACTA’s knowledge audit breaks down expertise using six to eight different probes.

We ended up doing five probes, due to the nature of John's expertise:

| Aspects of Expertise | Cues and Strategies | Why Difficult? |
|---|---|---|
| Past & Future<br>Able to quickly tell how the org got to its current state, and predict what problems it might be facing going forward. | John's first pass is always to detect anti-patterns. “Bad product orgs all kinda look the same, good orgs can succeed for very different reasons.” Then, work backwards from the anti-patterns to figure out how the org got here.<br>- First detect if there is high Work In Progress (WIP). Usually this presents itself with symptoms or complaints like ‘low morale’, or ‘no growth mindset’, or ‘no learning’. John uses these complaints to figure out if the team has high WIP, which is nearly always a sign of a problematic product org.<br>- If high WIP exists, then he works backwards to figure out how the org got there. (60% of the time, John says, a bad org has high WIP.)<br>- The other 40% of the time, John is able to quickly suss out other possible contributors to those problems (see Big Picture below). | A novice may be able to tell if there is high WIP, but there are many ways that orgs may end up with high WIP, and diagnosing how it got there is difficult.<br>Also difficult because ‘bad’ practices might not be really bad, and must be evaluated within the context of the org. |
| Big Picture<br>Able to rapidly construct a gestalt that includes a number of factors, like: decision authority, decision power and structure in the org, org culture and demographic (country) culture, product org structure and workflow, org ability to learn, existence of product strategy and business model. | Decision authority: Who really holds the power in the org? What is the shape of the decision authority, and how many stakeholders are involved when making a product decision?<br>- John will ask further questions to clarify if a product owner really has final authority over product decisions.<br>- Related: where does this org lie on the extremes of product ownership? One extreme: product team owns $20m worth of business. Other extreme: product team has to have 5 meetings and 3 stakeholder sign-offs for a new ticket to be created in Jira.<br>Organisational learning ability: Check if product leader/team is comfortable with uncertainty, and can talk about current hypotheses and experiments in play.<br>- “tell me your last 5 big product decisions”: the speed at which the product leader is able to answer gives John information about a) how quickly does the org ship/learn/decide? b) who has decision authority, c) how they think. If they have to pause to remember, likely they have not shipped recently, or perhaps the person is not very in tune with the org, and so on.<br>- Know the extremes of structured learning; e.g. on one extreme, there is mob programming, where nobody works on their own projects, extreme collaboration. Other end: everyone owns a specific project. Both exist and can work, in different industries.<br>- Does the product team or the software engineers have direct customer contact? If yes, or no ("why would we want to do that?!"), this tells John something about the org.<br>Seriousness of product engagement: If the company wants to use Amplitude or other analytics tool, John checks if this is a mainline activity or a vanity project, instead of being impressed by the absolute project budget size.<br>- Conversely, if the business says it has an analytics problem, but is making a lot of money or is in a monopoly position in the market, it doesn’t really have a problem.<br>Business model: John will check how the business makes money, in order to judge how important the product team is to the business.<br>- John is able to predict how a business model change will impact the product/eng teams, e.g. a business model change away from a high SEO, high traffic web product would mean changing the experimentation framework, doing fewer A/B tests, relying more on qualitative data, which means preparing the teams to switch to this new mode of learning and operating.<br>- An expert will know and appreciate the role of luck in finding and executing a successful product strategy.<br>- An expert will understand how a business model mismatch might affect org structure and impact various teams throughout the company.<br>Risk taking: If a company and product team is doing extremely well, John will know to ask ‘how often do your experiments fail?’. Not enough experiments that fail = company isn’t taking enough risks. An expert knows that companies have good times and bad times, and not taking enough risks may bite the product team when the company goes through a bad time.<br>Org culture: Is the company centralised, where HQ sets the culture and local teams don’t get a say, or is HQ more hands off on culture and local teams can set their own culture? This is a trade-off that experts can quickly identify.<br>- An expert will also be able to gauge if the org culture can produce psych safety, even though there are many different types of org cultures (e.g. company is toxic except for product team).<br>- Expert also has large sample of org cultures that exist out there (e.g. some companies you can move freely between teams, some companies you cannot).<br>Demographic / country culture: John matches the org culture with what he knows of the country’s culture (e.g. more individualistic vs more communitarian).<br>Product strategy: Check, in the following order: is there a strategy or not? -> is it halfway plausible or not? -> is it implicit or explicit? -> is the structure of the company aligned around the strategy of the company/product?<br>- An expert will know when to dig in to tell if the product strategy is plausible. It should be concrete, and centered on real world knowledge, and not fuzzy. | Decision authority: A novice would take the person’s title at face value, and not know to dig further to get the full picture of decision rights/product ownership. e.g. sometimes 'head of product' has no real power!<br>- Shape of decision authority/org structure: Novice might not know all the variations of possible org structures, and many org structures can actually work; you just have to know the right questions to ask for each. e.g. hard to have extreme product ownership in healthcare (there are many stakeholders) and this is ok.<br>Organisational learning ability: Novice would not pick up on cues that the product leader has ‘coherence’, and is comfortable with uncertainty. Novice will be distracted by buzzwords, confidence, or vision of the product leader.<br>- Novice may assume that the way they interact with customers in their previous or current company applies to everyone, e.g. if in their previous company they implemented whatever the customer wanted, they might not realise that some product companies do not build what the customer wants, but instead seek to understand them, and build product to improve their world, but not necessarily build whatever they ask for.<br>Seriousness of product engagement: Novice would be impressed by absolute budget size for analytics or Amplitude engagement and use it as a measure of how likely the project will succeed.<br>Business model: Novice might not know to check the business model when diagnosing a product team (business model affects the product team’s structure and importance).<br>- Novice might know all the names of business models, but not work out all the implications of business model change on the product team.<br>- Novice might underestimate what it actually takes to do a business model shift mid-stream in a large company.<br>Risk taking: Novice might see a successful company and not think about their risk-taking levels, and how this might affect them going forward.<br>Org culture: Novices might not know all the possible configurations of org culture that can work. (And there are many, e.g. maybe the company is toxic, but the product team is a safe haven, etc.)<br>Demographic/country culture: Novice would not have enough samples or experiences with different country cultures to know how the differences might affect team dynamics.<br>Product strategy: Novice might not know to check the product strategy at all levels as per John’s flow chart.<br>- A novice might miss the cues to dig in deeper and crisp up on the details of the product strategy. |
| Noticing<br>Able to pick up on attitudes and worldviews held by good product teams. Able to place them on a spectrum of org health, based on a large n of observed orgs. | Notice the team’s comfort with uncertainty: do they have a structured approach to the known unknowns? (John calls this ‘coherence’). A good product team has productive things to say about what is unknown ("we know this feature would do well, but we're not sure about that feature, but we have experiments going on for it"); a bad product team talks in generalities about the future.<br>Notice when the company has ‘flow’. Flow is a word John uses to describe when the company is executing and learning smoothly, and they are rapidly moving through the execution cycle, yet are agile and can change directions in response to new information. (Related to the ‘walk me through five of your last big product decisions’ question)<br>- This is a complex thing to notice, but John can quickly identify it. He breaks down some of the cues he notices as “do they have high decision quality, high decision flow; do they have available and excessive data, do they have clean data, do they have the ability to interpret the data, the ability to link cause and effect, do they have enough data, do they have diverse perspectives, do they have the information they need to operate, are they able to make sense of it and align on it, and build coherent shared understanding, can they harmonise decisions and missions and can they find a compelling, overarching story or strategy.”<br>Able to notice when a product leader is at the stage where they are naive: if they are enamoured by product frameworks but have yet to put them to practice, this is usually a signal that they are still novices. “Good product people put all these things to practice (from the frameworks), but they don’t talk about the frameworks. It’s just something they do”.<br>Quickly places the team on a spectrum, because John knows the extremes of each factor, and the contexts where they may or may not work. E.g. there are extremely centralised product teams and extremely decentralised product teams, and both can work. This also applies to culture, and decision authority, and so on. | Comfort with uncertainty: a novice might not pick up on cues, because it isn’t about what a person says, it is how they say it, and what that implies about how they think.<br>Flow: Novices would not be able to pick up on all the cues that indicate if a team has ‘flow’.<br>Detecting naive product leaders: Sometimes naive product leaders will sound thoughtful and insightful; they will quote many frameworks. They are also likely to believe that they ‘just’ have to sell it to their org and put it to practice and everything would work. In practice it’s more difficult; good product teams typically do not talk about such frameworks even though they live it. A novice would have difficulty knowing the difference between the two.<br>Extremes of Big Picture factors: Novices would not know where on a spectrum a given product team would lie. |
| Job Smarts<br>John is able to ask the minimum set of questions that generate the maximum set of insights. | Able to ask ‘Powerful Questions’ to cut through the mess. Powerful questions is John's name for questions that are: neutral, have no right or wrong answers, do not pass judgment, but ‘crack the problem at the biggest spot to understand what is going on in the org’, and usually ‘nail a tradeoff or polarity between two opposing forces’.<br>- Example of a weak question: ‘please tell me about the M&A that you do’, vs a powerful reframing: ‘given your pursuit of M&A at this particular moment, talk to me about the centralisation and decentralisation of your services to support that.’ and ‘it seems your company is really good at logistics, but what did it give up to get there?’ and ‘tell me about the last five consequential product decisions that you did’ (inability to tell John what these are, off the top of their heads, tells John something about the structure and velocity of the product team).<br>- Powerful questions will provoke a thoughtful answer from a senior person who is a good systems thinker. Junior people will give ‘softer’ answers, that are less multi-faceted or thorough.<br>Use humour to get a reaction. Humour opens up the conversation and allows John to ‘cut through the bullshit and get at the heart of the matter’.<br>- e.g. John jokes about quarters being weird, and when they react to that, it gives him an in to see how they feel about quarterly planning cycles, and if there are problems there, e.g. if they keep changing direction every quarter.<br>Show visual guides to provoke reactions and test for systems thinking ability. If they do not respond well to a concept map or flow chart, it is likely they are not a good systems thinker. (e.g. the WIP it good flow chart). | Novice would not know how to use ‘powerful’ questions, because it requires some knowledge of the factors that are in tension with each other and are at play.<br>Novice would not be able to use humour (or other tricks) to get a response, in order to gauge that response.<br>Novices would not know the range of responses to visuals to identify if the person is a good systems-level thinker or not. Experts like John can predict what a product leader would say; he showed me a list of all possible responses to Marty Cagan’s book. |
| Self-Monitoring<br>John knows the weaknesses of his approach, and is able to tell when he is unable to help the org. | Tends to be biased towards not blaming a specific person. John’s approach focuses on the system of the org, so he’s more reluctant to pin the blame on certain people within the system (when there certainly are cases where the person is bad and should be removed). He is aware of this and watches out for it.<br>Able to tell when he is unable to help the org. Example of an extreme case: if the org has a tiny market (~100 customers total!), and the particular product really isn’t that important to the success of the company. Took ~20 minutes to diagnose this. | It’s difficult to judge whether the system is failing the person (by setting them up to fail, incentives/structures not enabling them to do good work) vs the person being the problem. Whereas John would have seen enough working product systems to make a more calibrated call.<br>When John is not able to help an org, it’s usually an extreme edge case. The novice might not be able to tell when it’s an edge case. |
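If you want to keep your own knowledge audit in a form that is easy to edit and re-render, one option is to store each row as a record and generate the table from it. The sketch below is a minimal illustration, not part of ACTA itself: `AuditRow` and `to_markdown` are hypothetical names, the column headers are copied from the table above, and the sample row is abbreviated from the Job Smarts entry.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AuditRow:
    """One row of a knowledge audit table (hypothetical structure)."""
    aspect: str               # e.g. "Job Smarts"
    cues: List[str]           # cues and strategies the expert uses
    why_difficult: List[str]  # why a novice would struggle


def to_markdown(rows: List[AuditRow]) -> str:
    """Render rows as a Markdown table, joining multi-item cells with <br>."""
    lines = [
        "| Aspects of Expertise | Cues and Strategies | Why Difficult? |",
        "|---|---|---|",
    ]
    for row in rows:
        cues = "<br>".join(row.cues)
        why = "<br>".join(row.why_difficult)
        lines.append(f"| {row.aspect} | {cues} | {why} |")
    return "\n".join(lines)


rows = [
    AuditRow(
        aspect="Job Smarts",
        cues=["Asks 'Powerful Questions'", "Uses humour to get a reaction"],
        why_difficult=["Requires knowing which factors are in tension"],
    )
]
print(to_markdown(rows))
```

Keeping cues and difficulties as lists means a new observation from a later call can be appended without rewriting the whole cell.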

What Was Difficult?

The biggest difficulty with using ACTA was in deciding when to dive deep and let John go on about his experiences, and when to move on to the next set of probes. In our initial call, I let John go on too long while setting up the Task Diagram, and then we ran out of time, so I didn’t do a good job of the Knowledge Audit.

The second call went much better.

I think the biggest mistake that I made was that I grossly underestimated the amount of time it would take to do the whole skill extraction exercise. I scheduled an hour for our first call, whereas a more appropriate length might’ve been two hours. In the end, we did two separate calls together, the first being 1.5 hours long, and the second two hours long. It’s hard to say if these times are normal, but I wouldn’t go below two hours in the future.

The other problem I had with the process was that I was unsure if I was producing useful information. John seemed happy with our progress, but I wasn't so sure.

After our first call, but before our second, I had the good fortune of talking to Laura Militello and Brian Moon, hosts of the NDM podcast. Militello, of course, was one of the two creators of ACTA, and when I asked her if she had any tips for using it, she said to keep the objective of the interview at the back of my head.

“Are you doing this for a training program? Or are you designing some software?”

“This is for his book,” I said.

“Then you should ask the questions with the book in mind.”

This was pretty good advice. In my second call with John, I dropped certain probes, because it was clear that he wasn’t going to be able to put what they surfaced into written form. I told him that this would be a slightly different interview if he were designing a training program for the novices in his team, which wasn't something that was apparent to me before I’d tried the technique. John agreed that that was a pretty interesting nuance.

My only regret is that I didn’t get to do the Simulation Interview, which I think prevented me from creating a Cognitive Demands table. But I’m still rather happy for a first try — we both got a lot out of the Knowledge Audit alone.

Wrapping Up

Overall, I’m rather pleased with how this ACTA experiment turned out. If anything, it tells me that Militello and Hutton achieved their stated goals with the technique: ACTA was designed for practitioners in the field, and — as a practitioner in the field — I found the learning curve only a little difficult to climb.

I could also tell that John was pleased with the results of our exercise. Shortly after our calls, John tweeted:

my sincerest thank you to @ejames_c

for a long time I've felt a big tension between "simple frameworks" (that get everyone excited) and the beautiful mess, where tacit knowledge and experience reign

Cedric shared some techniques that made me realize this is not a trade-off. 🙏

John Cutler (@johncutlefish), July 11, 2021

This was something I’d heard directly from him. Near the end of our second session, he told me that he’d always found it difficult to articulate a clear framework for his product ability — which was vastly more nuanced than what he saw published in books and blog posts on the web. But of course he wasn’t able to describe it — experts are often unable to tell you how it is they do what they do. I’ve noticed that John’s Twitter bio reads “I like the beautiful mess of product development” — and his intuitive expertise is exactly that: a beautiful, effective, but mostly tacit ‘mess’.

I'm pretty pleased that I got to help him explicate some parts of his expertise. God knows how long he took to build it.

I’m looking for other opportunities to put ACTA to practice. I have a couple of candidates in mind, the most important of whom is my former technical lead, whose good programming taste was what started me down this path in the first place. For what it’s worth, I’ve signed up and paid for the Self Study course offered by the CTA Institute, a training organisation created by Militello, Hutton and Moon. I’m hoping that I’ll get some pointers on simulation design by the end of it.

If you’ve read to the end of this piece, I’d like to encourage you to give ACTA a try. Go find someone who’s willing to spend some time with you, who has some expertise that you might want to explicate for yourself (or even for them!). The method isn't that difficult to do. More importantly, you might surprise your subject by uncovering elements of their expertise that they didn’t already know.

To be honest, I've found that last bit to be the most rewarding part of putting ACTA to practice. The look of delight on John's face at the end of our two calls was pretty damned awesome. Godspeed, and good luck.
